Chapter 1

The First Thinking Machines

Gaak was missing. No one knew what it was thinking or if it was thinking at all. The only thing that robotics expert Noel Sharkey knew was that the small robotic unit he had just repaired had disappeared. Gaak had been injured in battle during a "survival of the fittest" demonstration at the Living Robots exhibition in Rotherham, England, in 2002. In this contest predator robots sought out prey robots to drain their energy, and prey robots had to learn to avoid capture or be inactivated.

Gaak, a predator robot, may have had enough of the competition. Only fifteen minutes after Sharkey left the robot's side, the autonomous machine forced its way out of its corral, sidled past hundreds of spectators, maneuvered down an exit ramp, and left the building. Gaak's bid for freedom was stopped short when it was almost run over by a car as it fled toward the exit gate.

Although this may sound like another sci-fi nightmare, it is actually an example of artificial intelligence at work today. AI is the study and creation of machines that can perform tasks that would require intelligence if a human were to do the same job. This emerging and constantly changing field combines computer programming, robotics engineering, mathematics, neurology, and even psychology. As a blend of many sciences, AI has a scattered history. It has almost as many processes as there are researchers active in the field. Like the limbs of a tree, each new idea spawns another, and the science of artificial intelligence has many branches. But its roots were planted by scientists and mathematicians who could imagine all the possibilities and who created amazing machines that startled the world.

The Analytical Engine

The first glimmer of a "thinking machine" came in the 1830s when British mathematician Charles Babbage envisioned what he called the analytical engine. Babbage was a highly regarded professor of mathematics at Cambridge University when he resigned his position to devote all of his energies to his revolutionary idea.

In Babbage's time, the complex mathematical tables used by ships' captains to navigate the seas, as well as many other intricate computations, had to be calculated by teams of mathematicians who were called computers. No matter how painstaking these human computers were, their tables were often full of errors. Babbage wanted to create a machine that could automatically calculate a mathematical chart or table in much less time and with more accuracy. His mechanical computer, designed with cogs and gears and powered by steam, was capable of performing multiple tasks by simple reprogramming, that is, by changing the instructions given to the computer.

The idea of one machine performing many tasks was inspired by the giant industrial Jacquard looms built by French engineer Joseph-Marie Jacquard in 1805. These looms performed mechanical actions in response to cards that had holes punched in them. Each card provided the pattern that the loom would follow; different cards would instruct the loom to weave different patterns. Babbage realized that the cards fed into a machine could just as easily represent the sequence of instructions needed to perform a mathematical calculation as they could a weaving pattern.

Babbage's Difference Engine

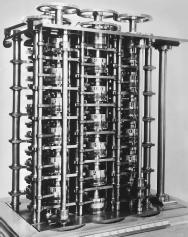

Although no one may ever see a real example of Charles Babbage's ingenious Analytical Engine, a Difference Engine was re-created in the 1990s from Babbage's original drawings by a team of engineers at London's Science Museum. Made of cast iron, bronze, and steel, the workable machine stands ten feet wide and six feet high and weighs three tons. The Difference Engine performs mathematical computations that are accurate up to thirty-one digits. But unlike the Analytical Engine, Babbage's Difference Engine is powered by hand. The computer operator has to turn a crank hundreds of times to perform one calculation.

His first attempt was called the Difference Engine. It could translate instructions punched on input cards into arrangements of mechanical parts, store variables in specially positioned wheels, perform the logical operations with gears, and deliver the results on punched output cards. Only one small version of the Difference Engine was created before Babbage turned his attention to a more ambitious machine that could perform more abstract "thinking."

Babbage's second design was a larger machine called the Analytical Engine. It was designed to perform many different kinds of computations such as those needed to create navigational tables and read symbols other than numbers. His partner in this venture was Lady Ada Lovelace, daughter of the poet Lord Byron. Unlike her father, who had a talent for words, the countess had a head for numbers. She is often credited with inventing computer programming, the process of writing instructions that tell a computer or machine what to do. Of Babbage's machine she said, "The Analytical Engine weaves algebraical patterns just as the Jacquard Loom weaves flowers and leaves." 1

Unfortunately Lovelace was never able to program one of Babbage's machines. As a result of financial problems and the difficulty of manufacturing precision parts for his machine, Babbage had to abandon his project. Almost a century and a half would pass before a similar machine was assembled. Seldom has such a long time separated an idea and its technological application.

The Turing Machine

The next and perhaps most influential machine to mark the development of AI was, once again, never even built. It was a theoretical machine, an idea that existed only on paper. Devised by the brilliant British theoretical mathematician Alan Turing in 1936, this simple machine consisted of a program, a data storage device (or memory), and a step-by-step method of computation. The mechanism would pass a long thin tape of paper, like that in a ticker-tape machine, through a processing head that would read the information. This apparatus would be able to move the paper along, read a series of symbols, and produce calculations based on the input on the tape. The so-called universal computer, or Turing machine, became the ideal model for scores of other researchers who eventually developed the modern digital computer. Less than ten years later, the three most powerful nations in the world had working computers that played an integral part in World War II.
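The three ingredients named above (a program, a tape that serves as memory, and a step-by-step method of computation) can be sketched in a few lines of Python. This is only an illustrative toy, not Turing's own notation: the function name `run_turing_machine`, the rule format, and the bit-flipping example program are all assumptions made for this sketch.

```python
from collections import defaultdict

BLANK = "_"  # symbol assumed here for an empty tape cell


def run_turing_machine(rules, tape, state="start", halt="halt", max_steps=1000):
    """Run a one-tape machine and return the final tape contents.

    `rules` (the program) maps (state, symbol_read) to
    (symbol_to_write, head_move, next_state); head_move is -1 or +1.
    The run stops at the halt state or after max_steps steps.
    """
    cells = defaultdict(lambda: BLANK, enumerate(tape))  # the tape (memory)
    head = 0
    for _ in range(max_steps):
        if state == halt:
            break
        # One step: read the cell under the head, write, move, change state.
        write, move, state = rules[(state, cells[head])]
        cells[head] = write
        head += move
    low, high = min(cells), max(cells)
    return "".join(cells[i] for i in range(low, high + 1)).strip(BLANK)


# Hypothetical example program: flip every bit, halt at the first blank.
FLIP_BITS = {
    ("start", "0"): ("1", +1, "start"),
    ("start", "1"): ("0", +1, "start"),
    ("start", BLANK): (BLANK, +1, "halt"),
}

print(run_turing_machine(FLIP_BITS, "1011"))  # prints 0100
```

Swapping in a different rule table changes what the machine computes without changing the machinery, which is the sense in which one such device can stand in for many.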

The Giants of the Computer Age

Teams of British mathematicians, logicians, and engineers created a computer called Colossus, which was used to decode secret enemy messages intercepted from Germany. The advantage of Colossus was that the British military did not have to wait days for a team of human decoders to unravel secret plans. Colossus could decode messages within hours. It helped save lives and was a key factor in the Allied forces' defeat of the Germans.

In the meantime, German mathematicians and computer programmers had created a computer to rival Colossus. Called Z3, the German computer was used to design military aircraft. The United States also entered the computer age with the development of a computer called the Electronic Numerical Integrator and Computer, or ENIAC, which churned out accurate ballistic charts that showed the trajectory of artillery shells for the U.S. Army.

ENIAC, Z3, and Colossus were monsters compared to modern-day computers. ENIAC weighed in at thirty tons, took up three rooms, and consisted of seventeen thousand vacuum tubes that controlled the flow of electricity. However, these tubes tended to pop, flare up, and die out like fireworks on the Fourth of July. Six full-time technicians had to race around to replace bulbs and literally debug the system: Hundreds of moths, attracted to the warm glow of the vacuum tubes, would get inside the machine and gum up the circuits.

The Binary Code

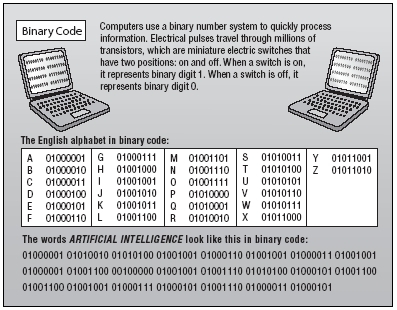

ENIAC and all other computers that have followed have spoken the same basic language: the binary code. This is a system of symbols used to program a computer. Proposed by U.S. mathematician Claude Shannon and expanded on by Hungarian-born mathematician John von Neumann in the 1940s, the binary code is a language with only two symbols, 0 and 1. Shannon and von Neumann showed that the simplest instruction was yes/no, or the flicking on and off of a switch, and that any logical task could be broken down to this switching network of two symbols. The system is binary, based on two digits, and combinations of these two digits, or bits, represent all other numbers.

Inside the computer, electrical circuits operate as switches. When a switch is on, it represents the binary digit 1. When a switch is off, it represents the binary digit 0. This type of calculation is fast and can be manipulated to count, add, subtract, multiply, divide, compare, list, or rearrange according to the program. This simple digital system can be used to program a computer to do even the most complex tasks. The results of electrical circuits manipulating strings of binary digits are then translated into letters or numbers that people can understand. For instance, the letter A is represented by the binary number 01000001. Today, by reading and changing binary digits, a machine can display a Shakespearean sonnet, play a Mozart melody, run a blockbuster movie, and even represent the entire sequence of human DNA.
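The translation between letters and strings of binary digits can be tried directly. The short Python sketch below assumes the standard ASCII character codes, under which the letter A is the number 65; the helper names `to_bits` and `from_bits` are made up for this illustration.

```python
def to_bits(text):
    """Return the 8-digit binary string for each character's ASCII code."""
    return [format(ord(ch), "08b") for ch in text]


def from_bits(bits):
    """Turn a list of 8-digit binary strings back into text."""
    return "".join(chr(int(b, 2)) for b in bits)


print(to_bits("A"))                           # prints ['01000001']
print(from_bits(["01000001", "01001001"]))    # prints AI
```

Each 0 or 1 in these strings corresponds to one switch being off or on, so a one-letter message occupies eight switches.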

Smaller and Faster

The basic digital language of early computers was fast, but the hardware that performed the tasks was not. Vacuum tubes were unreliable and broke down frequently. Computing was made faster with transistors, a crucial invention that emerged from Bell Laboratories in 1947 and came into widespread use in the 1950s. A transistor is a miniature electronic switch that has two operating positions: on and off. It is the basic building block of a computer that enables it to process information. Most transistors are made from silicon, a type of material that is called a semiconductor because certain impurities introduced to the silicon affect how an electrical current flows through it.

These early transistors were lighter, more durable, and longer lasting than vacuum tubes. They required less energy and did not attract moths, as ENIAC's vacuum tubes did. They were also smaller, about the size of a man's thumb. And smaller translated to faster. The shorter the distance the electronic signal traveled, the faster the calculation.

The next improvement came with the invention of the integrated circuit, an arrangement of tiny transistors on a sliver, or chip, of silicon that dramatically reduced the distance traveled. The amount of information held in a given amount of space also increased drastically. A vacuum tube, for example, could fit one bit of information in a space the size of a thumb. One of the first transistors could hold one bit in a space the size of a fingernail. Today a modern silicon microchip the size of a grain of rice can contain millions of bits of information. These rapid improvements in computing technology allowed for rapid advancements in the pursuit of artificial intelligence.

A Science Gets a Name

In pursuit of a machine that could pass the Turing Test, a group of researchers held a conference in 1956. Called the Dartmouth Conference, it was an open invitation to all researchers studying or trying to apply intelligence to a machine. This conference became famous not necessarily because of those who attended (although some of the most well-known AI experts were there) but because of the ideas that were presented. At the conference the researchers put forth a mission statement about their work. Quoted in Daniel Crevier's 1993 book AI: The Tumultuous History of the Search for Artificial Intelligence, the mission statement said, "Every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it." And the researchers came up with a name for their pursuits that became accepted worldwide and solidified a science: artificial intelligence.

The Turing Test

In 1950 Alan Turing solidified his place as the grandfather of AI with his paper "Computing Machinery and Intelligence." The now-famous report claimed that computing technology would one day improve to the point where machines would be considered intelligent. He knew this claim would be difficult to prove, so he also put forth the idea of a standardized test he called the Imitation Game. Now known as the Turing Test, it is set up in the following manner: An interrogator or judge sits in front of two computer terminals. One terminal is connected to a person in another room; the other terminal to a computer in a third room. The interrogator types questions on both terminals to try to figure out which terminal is controlled by a human and which is controlled by a machine. If the interrogator cannot decide which contestant is human, or chooses incorrectly, then the computer would be judged intelligent.

Turing's paper and its theoretical test marked another milestone in the development of AI. He predicted that by the year 2000 a computer "would be able to play the imitation game so well that an average interrogator will not have more than a 70 percent chance of making the right identification (machine or human) after five minutes of questioning." 2

The year 2000 has come and gone and no machine has yet passed the test. But the lack of a winner has not deterred AI programmers in their quest. Today an annual contest called the Loebner Prize offers $100,000 to the creator of the first machine to pass the Turing Test.

Some people argue that the Turing Test must be flawed. With all that artificial intelligence can do, it seems illogical to them that a machine cannot pass the test. But the importance of the Turing Test is that it gives researchers a clear goal in their quest for a thinking machine. Turing himself suggested that the game of chess would be a good avenue to explore in the search for intelligent machines, and that pursuit led to the development of another machine that made early AI history.

Deep Blue

The complex game of chess involves intellectual strategy and an almost endless array of moves. According to one calculation there are 10^120 (or 1 followed by 120 zeros) possible sequences of moves. In comparison, the entire universe is believed to contain only 10^75 atoms. Of course, no player could consider every possibility, but the best players are capable of thinking ahead, anticipating their opponent's play, and instantaneously selecting small subsets of best moves from which to choose in response. Prompted by Turing's suggestion that chess was a good indicator of intelligence, many AI groups around the world began to develop a chess-playing computer. The first tournament match that pitted a computer against a human occurred in 1967. But it was not until the 1980s that computers became good enough to defeat an experienced player. A computer called Deep Thought, created by students at Carnegie Mellon University in Pittsburgh, beat grandmaster Bent Larsen at a single game in 1988.

A team from IBM took over Deep Thought, reconfigured its programming, dressed it in blue, and renamed the new chess-playing computer Deep Blue. Deep Blue's strength was sheer power: It was so fast it could evaluate 200 million positions per second and look fifteen to thirty moves ahead. Its human opponent, the world chess champion Garry Kasparov of Russia, could consider only three moves per second. Deep Blue, outweighing its human opponent by almost a ton, won its first game in 1996. Kasparov fought back and managed to win the match four games to two. A rematch held in May 1997 again pitted a souped-up Deep Blue against Kasparov. This time Deep Blue won the six-game match, 3½ points to 2½. In an interview afterward, Kasparov admitted that he "sensed a new kind of intelligence" 3 fighting against him.
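The idea of looking ahead and anticipating an opponent's replies can be illustrated with a game far smaller than chess. The Python sketch below is not Deep Blue's program; it is an assumed stand-in that searches every line of play in a simple stone-taking game (players alternately remove one, two, or three stones, and whoever takes the last stone wins). The function names `can_win` and `best_move` are invented for this example.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def can_win(stones):
    """True if the player about to move can force a win with `stones` left."""
    # A move is good if it leaves the opponent in a position they cannot win.
    return any(not can_win(stones - take)
               for take in (1, 2, 3) if take <= stones)


def best_move(stones):
    """Return how many stones to take to force a win, or None if there is no such move."""
    for take in (1, 2, 3):
        if take <= stones and not can_win(stones - take):
            return take
    return None


print(best_move(5))  # prints 1, leaving the opponent with 4 stones
print(best_move(4))  # prints None, since every move from 4 stones loses
```

In a game this small the program can examine every possibility. In chess it cannot, which is why a chess computer has to stop after a limited number of moves ahead and estimate how good each resulting position is.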

Shakey the Robot

While some researchers were making headlines playing computer chess, others were exploring the combination of robotics and AI. In 1969 Nils Nilsson at the Stanford Research Institute created an early AI robot called Shakey. This five-foot-tall boxy robot earned its nickname from the video camera and TV transmitter mast extending from its top, which shook back and forth as the robot moved. Shakey's world was limited to a set of carefully constructed rooms. It was programmed with an internal map of the dimensions and position of every object in the rooms so that it would not bump into anything. Shakey moved around at the snail-like speed of one foot every five minutes, stopping periodically to "see" its environment through the camera lens, which transmitted images to the computer. It would then "think" of its next move. If someone came in and moved objects in the room while Shakey was "thinking," the robot would not notice and inevitably bump into the moved objects.

Shakey's program was so immense that the robot could not carry the necessary computer power around with it. It had to be attached by a thick cable to its electronic brain, located in another room. Because of its tethered existence and programmed environment, Shakey could not function outside of its own little world. But its importance lay in what it taught AI researchers about intelligence and the real world.

Machines like Deep Blue and Shakey represent the traditional era of AI. Traditional AI specialists thought—and some still do think—that human intelligence is based on symbols that can be manipulated and processed. Intelligence was equated with knowledge. The more facts people or computers knew, the more intelligent they were. That is why early AI projects focused on things that most people, including college professors, found challenging, like playing chess, proving mathematical theorems, and solving complicated algebraic problems.

Early AI researchers believed that the human brain worked like a computer, taking in information and converting it to symbols, which were processed by the brain and then converted back to a recognizable form as a thought. Every aspect of Shakey's world was programmed using the digital binary code so that the machine could perceive changes and operate within its mapped world. Deep Blue's chess program was created the same way. This traditional AI, although very rigid, had amazing success. It was the basis of programs called expert systems, the first form of AI put to practical, commercial use.

Expert Systems

An expert system is an AI program that imitates the knowledge and decision-making abilities of a person with expertise in a certain field. These programs provide a second opinion and are designed to help people make sound decisions. An expert system has two parts—a knowledge base and an inference engine.

The knowledge base is created by knowledge engineers, who interview dozens of human experts in the field. For example, if an expert system is intended to help doctors diagnose patients' illnesses, then knowledge engineers would interview many doctors about their process of diagnosing illnesses. What symptoms do they look for, and what assumptions do they make? These questions are not easy to answer, because a person's knowledge depends on something more than facts. Often experts use words like intuition or hunch to express what they know. Underlying that hunch, however, are dozens of tiny, subconscious facts or rules of thumb that an expert or doctor has already learned. When those rules of thumb are programmed into a computer, the result is an expert system. "Real [stock market] analysts think what they do is some sort of art, but it can really be reduced to rules," 4 says Edgar Peters of PanAgora Asset Management. This is true for most decision-making procedures.

The second part of an expert system, the inference engine, is a logic program that interprets the instructions and evaluates the facts to make a decision. It operates on an if-then type of logic program—for example, "if the sun is shining, then it must be daytime." Every inference engine contains thousands of these if-then instructions.
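The two parts of an expert system can be sketched in miniature. In the Python example below, the knowledge base is a short list of if-then rules and the inference engine is a loop that keeps applying them until nothing new can be concluded. Only the first rule comes from the text; the second rule, the function name `infer`, and the overall design are assumptions made for illustration, and a real system would hold thousands of rules rather than two.

```python
# Knowledge base: each rule is (conditions that must all hold, conclusion).
RULES = [
    (("the sun is shining",), "it is daytime"),
    # Hypothetical second rule, added only to show one rule feeding another.
    (("it is daytime", "the sky is clear"), "sunglasses are useful"),
]


def infer(rules, facts):
    """Inference engine: apply if-then rules until no new fact appears."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conclusion not in known and set(conditions) <= known:
                known.add(conclusion)  # the "then" part fires
                changed = True
    return known


conclusions = infer(RULES, {"the sun is shining", "the sky is clear"})
print(sorted(conclusions))
```

Starting from two observed facts, the engine first concludes that it is daytime and then uses that conclusion to fire the second rule. Keeping the rules in a separate list means new expertise can be added without touching the engine itself.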

The first expert systems were used in medicine, business, and finance to help doctors and business managers make better decisions. Expert systems are still used today in the stock market, banking, and military defense.

Intelligence or Imitation?

Traditional AI represents only one type of intelligence. It is very effective at applying knowledge to a single problem, but over time, it became apparent that traditional expert systems were too limited and specialized. A chess program like Deep Blue could play one of the most difficult strategy games in the world, but it could not play checkers or subtract two-digit numbers, and it performed its operations without any understanding of what it was doing.

In 1980 John Searle, a U.S. philosopher, described artificial intelligence with an analogy he called the Chinese room. Suppose an English-speaking person is sitting in a closed room with only a giant rule book of the Chinese language to keep him company. The book enables the person to look up Chinese sentences and offers sentences to be used in reply. The person receives written messages through a hole in the wall. Using the rule book, he looks up the sentences, responds to them, and slips the response back through the hole in the wall. From outside the room it appears that the person inside is fluent in the Chinese language, when in reality, the person is only carrying out a simple operation of matching symbols on a page without any understanding of the messages coming in or going out of the hole.

Searle's analogy made researchers wonder. Was AI just an imitation of human intelligence? Was intelligence simply the processing of information and spitting out of answers, or was there more to it than that?
